It's not loading for me either ;;

Whoops I broke it. Just a moment.

Edit: should be good now

This is still not loading for me

Just now started loading for me.

Yup, loading now… I'm surprised that another 18 planets rolled between the time you posted that and now…

1196 planets found… as of 9:35 a.m. EST

The data is cached for one hour, so there's that, but it's still a ton.

According to the update notes, type selection was added to the world builder today. I’m sure people are playing with that.

Hi, I am really fond of these data extraction and display functions!

I have (as always) three questions:

1/ Is it possible to add a "select planet(s) by name (mask/pattern)" filter?

2/ The “is editable” property is shown as “no” even when I have activated the “allows to modify blocks…” option in the world controller. Is this property something different?

3/ Sovereign/creative planets orbit a planet at 1 blinksec. Knowing which planet that is would help when setting up portals. Is this info obtainable somewhere?

Good work!

- I had a filter for planet name there but it wasn’t getting used much so I removed it. I can add it back if you’d like.

- You have the right property, but your planet likely needs a new scan from someone running the BoundlessProxyUI - Help track Sovereign World Colors + More! tool.

- The closest planet (assigned planet) used to be displayed beneath the planet name, but it looks like I accidentally removed it. Adding it back now.
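For the name filter from question 1, a mask could be matched client-side against the already-loaded world list. A minimal sketch, assuming each world is a dict with a hypothetical `name` key and using shell-style wildcards (`*`, `?`) via Python's `fnmatch`; the explorer itself runs in the browser, so this only illustrates the matching logic:

```python
from fnmatch import fnmatchcase


def filter_by_name(worlds, pattern):
    """Return worlds whose name matches a shell-style mask such as 'alpha*'.

    Both sides are lowercased so matching is case-insensitive on every OS.
    'name' is a hypothetical field name, not confirmed from the API.
    """
    mask = pattern.lower()
    return [w for w in worlds if fnmatchcase(w["name"].lower(), mask)]


worlds = [{"name": "Alpha Prime"}, {"name": "Beta"}, {"name": "Alpha Minor"}]
print([w["name"] for w in filter_by_name(worlds, "alpha*")])
# prints ['Alpha Prime', 'Alpha Minor']
```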

FYI everyone, the buttons in the top-right of each planet are styled a little wonky right now. I'll be fixing it shortly.

Since getting a 504 gateway error from the dump endpoint seems to be a common thing, would it be possible to get some kind of error handling for that?
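One shape such error handling could take is retrying only on HTTP 504 with a growing delay, and surfacing every other error immediately. A minimal sketch in Python, assuming a hypothetical `fetch_with_retry` helper; the dump endpoint's URL isn't given here, and the attempt count and delays are illustrative guesses. `opener` and `sleep` are injectable only so the logic can be tested without a network:

```python
import time
import urllib.error
import urllib.request


def fetch_with_retry(url, attempts=3, delay=5.0,
                     opener=urllib.request.urlopen, sleep=time.sleep):
    """Fetch url, retrying only on HTTP 504; the wait doubles each time.

    Any other HTTP status, or a 504 on the final attempt, is re-raised so
    the UI can show its normal error message.
    """
    for attempt in range(attempts):
        try:
            with opener(url) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            if err.code != 504 or attempt == attempts - 1:
                raise
            # Waits 5s, then 10s, ... before the next attempt.
            sleep(delay * 2 ** attempt)
```

Retrying only on 504 keeps genuine failures (404, 500) from being hidden behind a long spinner.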

I’m getting an error today

“Unfortunately, something went wrong. Click below to try again or refresh the page…”

I tried a hard refresh.

Taking a look right now.

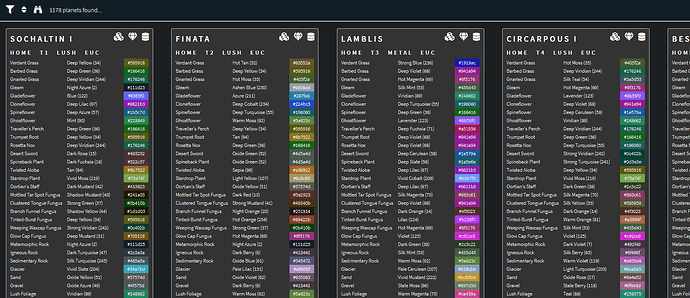

Edit: It loads up for me:

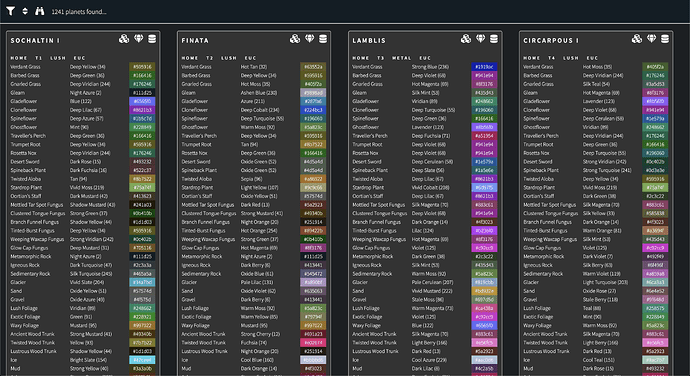

One thing worth mentioning is that the data I'm using is now up to 18MB in size (I'm going to have to solve this problem sooner rather than later, because 18MB is already far too much for most folks to transfer). That data gets cached on the API side once every hour, and it takes almost 2 minutes to DO the caching. During those 2 minutes people will see the error message, and there's nothing I can really do about that. Just try again in a few minutes.
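For reference, a common pattern for hiding a rebuild window like this on the API side (not something the thread says Boundlexx does) is to keep serving the previous snapshot until the new one is ready, similar in spirit to HTTP's stale-while-revalidate. A minimal sketch, where `build` is a hypothetical callable standing in for the ~2-minute job that produces the full data set:

```python
import threading
import time


class SnapshotCache:
    """Serve the last good snapshot while a fresh one is built in the background."""

    def __init__(self, build, ttl_seconds=3600, clock=time.monotonic):
        self._build = build          # hypothetical: produces the full data set
        self._ttl = ttl_seconds
        self._clock = clock
        self._lock = threading.Lock()
        self._data = None
        self._built_at = None
        self._rebuilding = False

    def get(self):
        with self._lock:
            if self._data is None:
                # Cold start: nothing to serve yet, so build synchronously
                # (other callers wait on the lock until this finishes).
                self._data = self._build()
                self._built_at = self._clock()
                return self._data
            expired = self._clock() - self._built_at >= self._ttl
            if expired and not self._rebuilding:
                self._rebuilding = True
                threading.Thread(target=self._refresh, daemon=True).start()
            # Expired or not, callers get the previous snapshot immediately.
            return self._data

    def _refresh(self):
        try:
            fresh = self._build()
        except Exception:
            # Keep serving the old snapshot; a later get() will retry.
            with self._lock:
                self._rebuilding = False
            return
        with self._lock:
            self._data = fresh
            self._built_at = self._clock()
            self._rebuilding = False
```

The trade-off is that for those ~2 minutes readers see hour-old data instead of an error page.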

Yup, I think it's time to rely a bit less on JavaScript and instead process/cache the 18MB of data server-side and paginate it there before serving it to users.

Pagination is already there server-side afaik, but the API isn't set up for the kind of filtering I'm doing client-side. The filtering and sorting I'm providing folks is purely client-side and requires that I have ALL of the data. There are two ways forward as far as I see it:

1. Take the filtering and sorting options I'm currently providing client-side and offer them as part of the API instead.

2. Add a PS Planet Explorer-specific endpoint for retrieving all worlds + block colors + the latest poll, and trim out everything that is not absolutely needed to cut the data size down as much as we can.

Option 1 is probably the better approach, but it will require @Angellus to do a significant amount of work adding the necessary filters and sorting. It would also tightly couple @Angellus and me going forward, such that if I want to add new features to the Planet Explorer, they would always have to be built in tandem with new features in Boundlexx.
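The key constraint behind the first option (moving filtering and sorting into the API) is ordering: the server has to filter and sort the full list before it slices out a page, otherwise a filter only ever searches the worlds on the current page. A minimal sketch with a hypothetical `query_worlds` function and hypothetical field names; it is not Boundlexx's actual API:

```python
def query_worlds(worlds, filters=None, sort_key="name", descending=False,
                 page=1, page_size=50):
    """Filter and sort the FULL world list, then return one page of it.

    filters maps hypothetical field names to the exact value required,
    e.g. {"tier": 6}. Pages are 1-based.
    """
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be >= 1")
    filters = filters or {}
    matched = [w for w in worlds
               if all(w.get(field) == value for field, value in filters.items())]
    matched.sort(key=lambda w: w[sort_key], reverse=descending)
    start = (page - 1) * page_size
    # Return the total match count too, so a client can render page links.
    return matched[start:start + page_size], len(matched)
```

Every new filter or sort in the explorer would become a new parameter here, which is exactly the coupling concern raised above.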

So fetching the data from the API and processing it the way you need it server-side (on portalseekers.com) is out of the question? The reason I ask is that you could then format the data the way YOU need it, without requiring @Angellus to keep changing the API.

Processing and caching it server-side (on portalseekers.com) would also GREATLY reduce the stress on @Angellus's servers.
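The reshaping half of that suggestion could be as simple as keeping only the fields the explorer renders before serving or caching the result. A minimal sketch; the field names in `KEEP` are hypothetical, since the thread doesn't list which fields the explorer actually uses:

```python
import json

# Hypothetical: the only fields the explorer renders.
KEEP = ("id", "name", "tier", "world_type", "block_colors")


def trim_worlds(worlds, keep=KEEP):
    """Drop every field not listed in keep from each world record."""
    return [{k: w[k] for k in keep if k in w} for w in worlds]


def payload_size(obj):
    """Size in bytes of obj serialized as compact UTF-8 JSON."""
    return len(json.dumps(obj, separators=(",", ":")).encode("utf-8"))
```

Comparing `payload_size(worlds)` with `payload_size(trim_worlds(worlds))` on a real response would show how much of the 18MB is actually needed.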

Sure, I can cache it server-side on my end, but then you've got double caching and greatly out-of-date data. So why even have a tool, and why even have an API?

Define double caching. I don't think the API itself caches its data.

Angellus is already caching the data I'm using for an hour, so the data I retrieve is anywhere from 1 to 60 minutes old. This is why active exos don't show up right away in my tool. Now, I can get around that particular problem by making a separate API call on load to check explicitly for exos, but you get the point.
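That exo workaround means merging a small, fresh response into the larger cached one on the client. A minimal sketch, assuming both responses are lists of dicts sharing a hypothetical `id` key and that `active` is a hypothetical field; the thread doesn't name the exo endpoint:

```python
def merge_fresh_exos(cached_worlds, fresh_exos):
    """Overlay an uncached exo list onto the hourly-cached world list.

    Fields from the fresh records win; exos the cache hasn't seen yet are
    appended at the end. Neither input list is modified.
    """
    by_id = {w["id"]: w for w in cached_worlds}
    for exo in fresh_exos:
        by_id[exo["id"]] = {**by_id.get(exo["id"], {}), **exo}
    return list(by_id.values())
```

Since dicts keep insertion order, worlds already in the cache keep their position and only brand-new exos move to the end.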

If I add server-side caching on my end (i.e. stand up a MySQL database to store the data, cache it there, and pull data from it into my app), then we have double caching.
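To put a rough number on "greatly out of date": if the local cache happens to refresh just before the API's hourly cache does, it copies data that is already almost 60 minutes old and then serves it for up to another full TTL. Assuming, hypothetically, that the local cache also used a one-hour TTL, the worst-case age roughly doubles:

```latex
A_{\max} \approx T_{\text{API}} + T_{\text{local}} = 60\ \text{min} + 60\ \text{min} = 120\ \text{min}
```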